Demo: Deferred Lighting in OpenGL

Posted: Sun Oct 04, 2009 1:17 pm

This one is a quick & dirty demo of a typical fat-gbuffer approach to deferred lighting using OpenGL and GLSL.

Deferring the lighting until after the primary geometry pass leaves enough headroom to evaluate a Lambertian lighting equation many hundreds of times per frame in screen space. The practical limit in this demo is about 2056 visible dynamic lights in the scene at any one time.
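For anyone who wants the per-pixel math spelled out, here is a rough CPU-side sketch of a Lambertian point light term in C++. It is not taken from the demo's shaders: the `Vec3` type, the function name `lambertPointLight`, and the linear falloff to zero at the light radius are all assumptions made for illustration. The demo passes its own attenuation parameters, which are not described in this post.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical minimal vector type; the demo's own math types are not shown in the post.
struct Vec3 { float x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Lambertian contribution of one point light at one pixel.
// surfacePos, normal and lightPos are assumed to be in the same space (e.g. view space),
// and normal is assumed to be unit length.
// ASSUMPTION: attenuation falls off linearly to zero at `radius`; this is a stand-in
// for whatever attenuation parameters the demo actually uses.
inline Vec3 lambertPointLight(Vec3 surfacePos, Vec3 normal, Vec3 diffuse,
                              Vec3 lightPos, Vec3 lightColor, float radius) {
    const Vec3 toLight = lightPos - surfacePos;
    const float dist = std::sqrt(dot(toLight, toLight));
    if (radius <= 0.0f || dist >= radius) {
        return {0.0f, 0.0f, 0.0f};  // pixel is outside the light's range
    }
    // Normalize the light direction; if the light sits exactly on the surface,
    // fall back to the normal to avoid dividing by zero.
    const Vec3 l = (dist > 0.0f) ? toLight * (1.0f / dist) : normal;
    const float nDotL = std::max(dot(normal, l), 0.0f);  // Lambert's cosine term
    const float atten = 1.0f - dist / radius;            // assumed linear falloff
    const float k = nDotL * atten;
    return {diffuse.x * lightColor.x * k,
            diffuse.y * lightColor.y * k,
            diffuse.z * lightColor.z * k};
}
```

In a deferred renderer the results of a function like this would be accumulated additively for every light whose volume covers the pixel, which is why the cost scales with covered pixels rather than with scene geometry.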

First we make a primary pass of the scene geometry into the gbuffer. We currently render to two targets at once: the view-space normal and surface depth go to one, and the diffuse color goes to the other. In a second pass we sort the lights by visibility and by whether the camera is inside the light, then stream the lights in as simple boxes sized to the light radius. Along with this vertex format we also pass the camera position, light position, light color, light radius, and attenuation parameters. As the lights are streamed in, the scene is reconstructed in view space and the lit result is written per pixel to the back buffer.
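The "camera inside the light" sort can be sketched roughly like this in C++. This is only an illustration of the idea, not the demo's code: `PointLight`, `cameraInsideLightBox`, `sortLightsByCameraInside` and the `pad` parameter are hypothetical names, and frustum visibility culling is left out entirely. A common reason for splitting lights this way is that a box containing the camera has its front faces clipped by the near plane, so it usually needs different cull and depth state; whether the demo handles it exactly like that is not stated here.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical light record; the demo's real vertex format carries more data.
struct PointLight {
    float x, y, z;       // position, in the same space as the camera position
    float radius;        // half-extent of the box drawn for this light
    bool cameraInside;   // filled in by sortLightsByCameraInside
};

// True when the camera lies inside the axis-aligned box drawn for the light.
// `pad` is an assumed safety margin (e.g. to cover the near plane distance).
inline bool cameraInsideLightBox(const PointLight& l,
                                 float cx, float cy, float cz, float pad) {
    const float h = l.radius + pad;
    return std::fabs(cx - l.x) <= h &&
           std::fabs(cy - l.y) <= h &&
           std::fabs(cz - l.z) <= h;
}

// Flags every light, then reorders the list so that lights the camera is
// outside of come first and lights containing the camera come last.
// Returns the index of the first "camera inside" light, so each group can be
// drawn as one batch with its own render state.
inline std::size_t sortLightsByCameraInside(std::vector<PointLight>& lights,
                                            float cx, float cy, float cz,
                                            float pad) {
    for (PointLight& l : lights) {
        l.cameraInside = cameraInsideLightBox(l, cx, cy, cz, pad);
    }
    auto firstInside = std::stable_partition(
        lights.begin(), lights.end(),
        [](const PointLight& l) { return !l.cameraInside; });
    return static_cast<std::size_t>(firstInside - lights.begin());
}
```

Using `std::stable_partition` keeps the original order inside each group, so any earlier sort (for example by distance) would survive the split.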

Because this is a precursor to a proof-of-concept demo, it does not make use of MSAA.

The source code and executable can be found at:

http://www.moogenstudios.com/wp-content ... ferred.rar

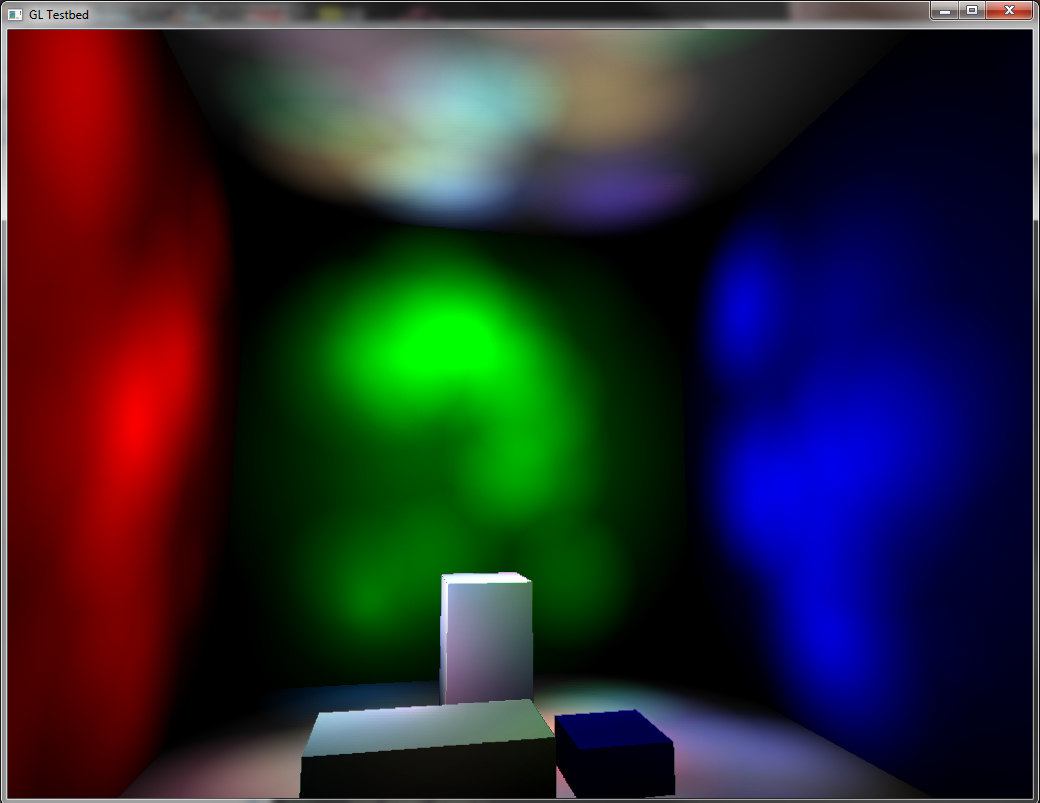

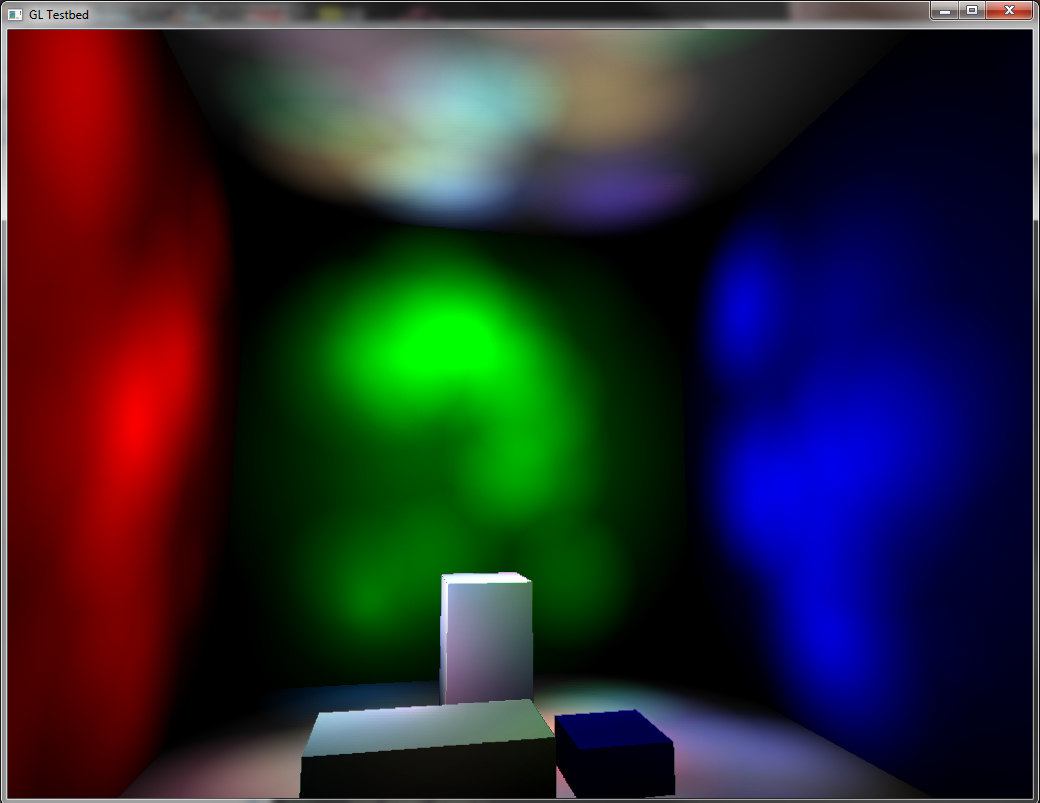

Single Point Light:

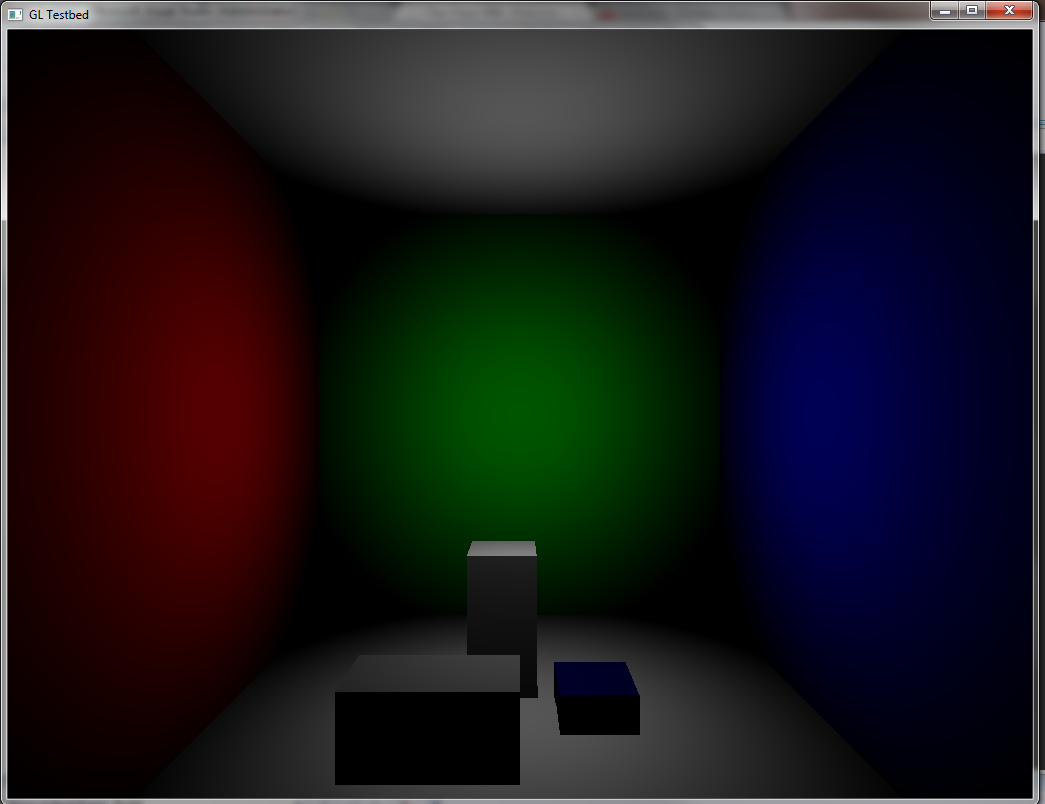

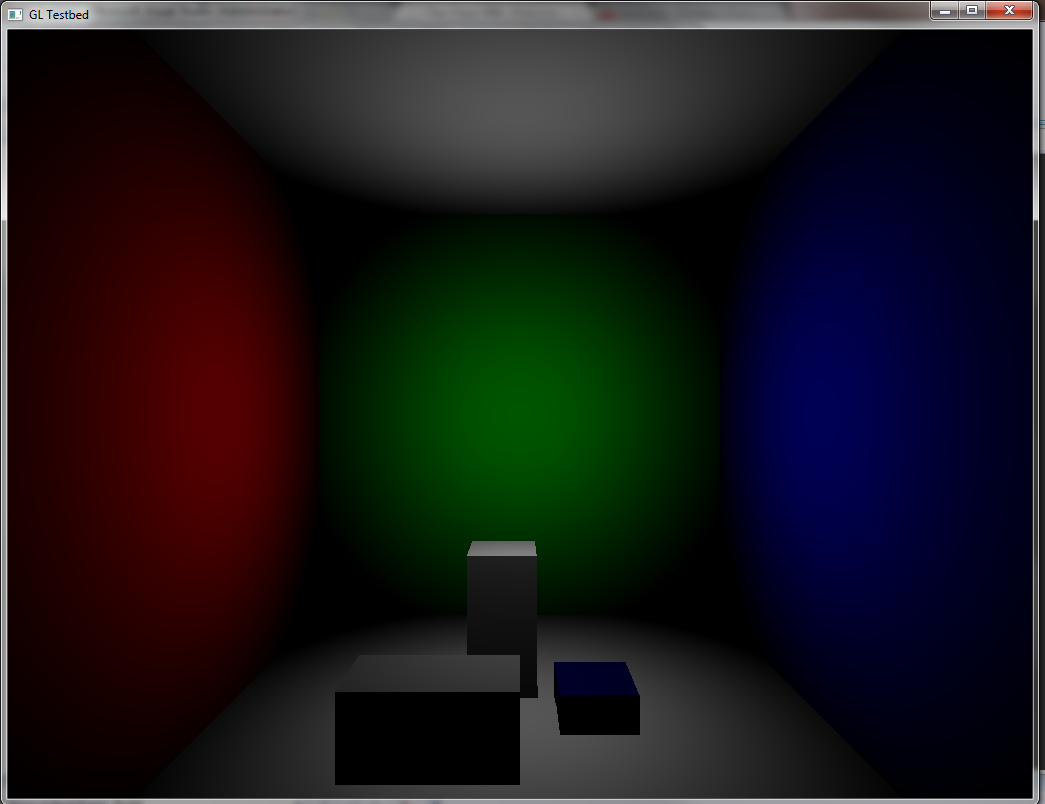

Several hundred point lights:
